Data Science Process is a systematic process of extracting meaning from data. Any systematic process has to have a series of stages that break down the problem into manageable chunks of work. I would divide the Data Science Process into five stages that are carried out in the shown order.

- Data Collection

- Data Pre-Processing

- Exploratory Data Analysis

- Model Building

- Visualization and Reporting

I will briefly describe these stages in the next few paragraphs, following which is a case-study that should serve as an example for understanding these stages better. If you find the description to be too wordy please feel free to skip to the case-study.

Data is the raw material for creating any Data Product. It is the Oil that power's fact-based and insightful business decisions. As with any raw material, like crude oil it is often found in the depths of the earth or the oceans in a form that is not directly usable (figuratively speaking).

Data is often found in many forms such as historical logs generated by machines (or by humans, for the unlucky Data Scientist), events generated by sensors, archived texts, photos and videos, structured data in databases, emails/chat conversations, X-Ray or MRI reports etc.

They may be found in electronic form in internet servers, local / in-house servers, backup/archival tapes, database servers or in physical form such printed documents, printed photos, taped conversations, hand written logs, XRay/MRI reports etc. Depending on the application / problem being solved data has to be extracted / mined from various such sources before we can started working on it. This is what broadly constitues Data Collection.

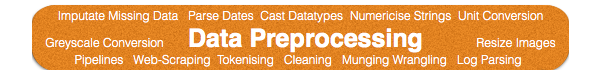

Continuing with the analogy of raw material/crude oil, data is often not found in a readily usable form. Data preprocessing is analogous to refining crude oil or processing physical raw materials that often are contaminated and dirty.

Continuing with the analogy of raw material/crude oil, data is often not found in a readily usable form. Data preprocessing is analogous to refining crude oil or processing physical raw materials that often are contaminated and dirty.

The analytics pipelines and the machine learning models require the data to be in a form that they can understand. One cannot simply throw data in any form to an analytics or a machine learning system and expect meaningful outcome. It has to be cleaned and converted.

For example, a fault log record may be in some proprietary form with data missing in multiple fields. The log records would have to be parsed, using a regular expression engine for example, to extract data from all the fields. Missing data has to be filled in with sensible data (a process called Imputation) since many data systems don't like missing data.

Format conversion or Unit conversion may have to be applied since some fields may have data in different formats, like dates, or in different units, like cm/inches. These are a just few examples to give an idea of what Data Preprocessing entails. Different systems will requires different preprocessing steps depending on the form in which raw data is available.

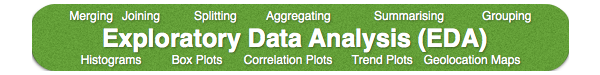

Once the data is cleaned, converted and refined, it is ready for "exploration". The wikipedia definition of the word Exploration is "the act of searching for the purpose of discovery of information or resources". This very aptly describes what Data Exploration is all about - the process of discovering meaning from data.

Once the data is cleaned, converted and refined, it is ready for "exploration". The wikipedia definition of the word Exploration is "the act of searching for the purpose of discovery of information or resources". This very aptly describes what Data Exploration is all about - the process of discovering meaning from data.

Data System/Project is created with the intent of solving some problem using Data. The exploration process is guided by this intent. There may be many "stories" hidden in data. But we are interested in finding out, through the process of exploration, the story that best explains the intent.

Operations like joining, merging, grouping, aggregating etc are performed to understand the distribution and statistical nature of data. The characteristics (categorical or numerical, sparse or dense) of the data influence the EDA process.

Visualizing data in the form of graphs and charts is an inherent part of EDA. Histograms and Box plots are used for visualizing distributions. Line plots and bar plots are used to understand trends. Cartographic maps are used to visualize geographic spread. Scatter plots are used to identify correlations.

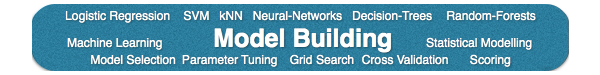

This is the stage where the capability of "learning" from data is infused into the system. EDA helps us understand distributions, correlations and trends in data. But we don't want to stop at discovering trends. We want the system to "learn" from data and then be able to predict/classify new data using that knowledge. This creates true value.

This is the stage where the capability of "learning" from data is infused into the system. EDA helps us understand distributions, correlations and trends in data. But we don't want to stop at discovering trends. We want the system to "learn" from data and then be able to predict/classify new data using that knowledge. This creates true value.

There are a number of Machine Learning Algorithms and Statistical Modeling Techniques that are used to learn from data. For example, using Logistic Regression algorithm we can achieve binary / multiclass classification. This is an example of a Linear Model. Linear Models are limited in their ability to learn non-linear relationships. Non-linear models like Random Forests, Gradient Boosting Machines (GBM), Neural Networks are capable of learning complex, non-linear relationships. Machine Learning and Statistical Modeling is a big area of study and active research.

I will talk about my understanding of some of these algorithms in one of my upcoming posts.

When a Data Scientist works with data she brings her knowledge and intuition acquired over years of experience to bear on the problem at hand. A lot of analysis and number crunching that goes into the process of discovering insights and making machines learn is backed by statistical knowledge and know-how. The final consumers of the outcome are often not well versed with statistics. One of the challenges that a Data Scientist has to address is to convey the findings in a way that a consumer can comprehend.

When a Data Scientist works with data she brings her knowledge and intuition acquired over years of experience to bear on the problem at hand. A lot of analysis and number crunching that goes into the process of discovering insights and making machines learn is backed by statistical knowledge and know-how. The final consumers of the outcome are often not well versed with statistics. One of the challenges that a Data Scientist has to address is to convey the findings in a way that a consumer can comprehend.

The findings are mostly reported in the form of graphs and charts, since distributions, correlations and trends are best understood by visualizing them. In the Python world, matplotlib, pandas convenience functions and Seaborne are widely used. There are other commercial tools like Tableau that can be used for this purpose.

Case Study¶

Let me use a case study to make this process more concrete.I will use Predicting RedHat Business Value machine learning challenge hosted by Kaggle for this purpose. I am using this example because I had participated in challenge and know the dataset well.

Following is an extract of the problem description.

"In this competition, Kagglers are challenged to create a classification algorithm that accurately identifies which customers have the most potential business value for Red Hat based on their characteristics and activities.

With an improved prediction model in place, Red Hat will be able to more efficiently prioritize resources to generate more business and better serve their customers."

I will go through the process of getting the data into memory, cleaning it, exploring it, selecting a suitable Machine Learning algorithm to train the data on, tuning the selected model, performing feature engineering and finally scoring on the test data to see how well the model performs on unseen data.

Libraries used

- Pandas for Data Wrangling

- Scikit-Learn for Model Building

- Matplotlib / Searborn for Visualization

Data Collection¶

In Kaggle comptetions there's not much to do in this stage. We simply download the data from the "Data" page of the competition. The data is generally in CSV form unless it is a competition that has to do with images/audio.

The data for the RedHat competition is available here: https://www.kaggle.com/c/predicting-red-hat-business-value/data. The description of the data can also be found in the same page.

There are four CSV files - people.csv, act_train.csv, act_test.csv and submissions.csv. We will be using only the first three files. The last one is a dummy file that is used to show how the submission file should look like.

I won't describe the files here since I would be repeating what is already said in the competition site. We will instead have a look at the data.

Before we do that let's import some libraries.

import numpy as np

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

%matplotlib inline

people_df = pd.read_csv('../input/people.csv', index_col='people_id')

print('\nShape of People table: %s\n' % (people_df.shape, ))

print('First five rows and columns of People...')

people_df[people_df.columns[:7]].head()

Here's what we did with people.csv in the code cell above.

- We read 'people.csv' into a pandas DataFrame using the pandas function read_csv

- Printed its shape and the first 3 rows of the table using head function to get a sense of what the different columns look like.

Let's repeat the same thing with act_train.csv.

act_train_df = pd.read_csv('../input/act_train.csv', index_col='activity_id')

print('\nShape of act_train table: %s\n' % (act_train_df.shape, ))

print('First five rows and eight columns of act_train...')

act_train_df[act_train_df.columns[:8]].head()

Following is what we can tell about these tables from the first look.

people

- there is time information in the 'date' column, possibly altered (converted to future dates) for privacy/security reasons

- there is some sort of grouping as indicated by the 'group_1' column

- the characteristics of individuals are captured in 38 categorical columns, 10 of which are numerical and the rest boolean

activity

- records are indexed into people table through the 'people_id' column

- there is time information, altered similar to the date column in people table;

- activities are categorized into activity types

- the characteristics of activities are captured in 10 categorical columns - char_1 to char_10; all are of string type and most of them seem to have no data

- the 'outcome' column is the 'label' that is made available in the training data but hidden in the test data; this is what the Machine Learning model/algorithm has to predict.

Data Preprocessing¶

We have read the data and know what it looks like. We are now ready for "preprocessing". This is the stage where we convert/transform data to a form that is suitable for further analysis and learning.

We will first look for tranformations that are common to both the tables so that we can write common code. The following preprocessing steps are applied in the code cells below.

- Imputate missing data

- Convert string type categorical data to their numerical equivalents

- Map boolean people characteristics to thier integer equivalents - False: 0 and True: 1

- Extract category information from 'activity_id' column

- Replace column names that are duplicated in activity and people tables with unique names

- Cast numeric columns to datatypes that are just big enough to accommodate their value range

We will define functions for these preprocessing steps so that they can be used for preprocessing test data later.

def preprocess_act(act_df):

# Impute missing data

act_df = act_df.fillna(value = 'type 0')

# Convert categorical string type columns to their integer equivalents

cat_cols = act_df.columns.difference(['people_id', 'outcome', 'date'])

act_df[cat_cols] = act_df[cat_cols].apply(

lambda x: x.str.split(' ').apply(lambda y: y[1])).astype(int)

# Convert date from string type to Timestamp

act_df['date'] = pd.to_datetime(act_df['date'])

# Process activity_id - split id into category of activity (act1 / act2) and id number

act_id_parts = pd.DataFrame.from_records(

act_df.reset_index()['activity_id'].apply(lambda x: x.split('_')).tolist(),

columns=['act_cat', 'act_id'], index=act_df.index)

act_id_parts['act_cat'] = act_id_parts['act_cat'].map({'act1': 1, 'act2': 2})

act_df = pd.concat([act_df, act_id_parts['act_cat']], axis=1)

# Let's append '_act' to char_# & date cols to differentiate them from People 'char's

char_old_name_new_name_map = {}

for i in range(1, 11):

char_old_name_new_name_map['char_' + str(i)] = 'char_' + str(i) + '_act'

act_df.rename(columns=char_old_name_new_name_map, inplace = True)

act_df.rename(columns={'date': 'date_act'}, inplace = True)

# Convert all columns except people_id and char_10 to np.int8 type.

cast_cols = act_df.columns.difference(['people_id', 'outcome', 'date_act',

'char_10_act'])

act_df[cast_cols] = act_df[cast_cols].apply(lambda x: x.astype(np.int8))

# Convert char_10 to np.int16 type

act_df['char_10_act'] = act_df['char_10_act'].astype(np.int16)

return act_df

act_train_df = preprocess_act(act_train_df)

print('First five rows and seven columns of preprocessed act_train...')

act_train_df[act_train_df.columns[:7]].head()

def preprocess_ppl(ppl_df):

# Convert date from string type to Timestamp

ppl_df['date'] = pd.to_datetime(ppl_df['date'])

# Convert categorical string type columns to their integer equivalents

ppl_numr_cols = ['char_' + str(i) for i in range(1, 10)] + ['group_1']

ppl_df[ppl_numr_cols] = ppl_df[ppl_numr_cols].apply(

lambda x: x.str.split(' ').apply(lambda y: y[1])).astype(int)

# Append '_ppl' to char_# & date cols to differentiate them from Activity 'char's

char_old_name_new_name_map = {}

for i in range(1, 39):

char_old_name_new_name_map['char_' + str(i)] = 'char_' + str(i) + '_ppl'

ppl_df.rename(columns=char_old_name_new_name_map, inplace = True)

ppl_df.rename(columns={'group_1': 'group_ppl', 'date': 'date_ppl'}, inplace = True)

# Convert all columns except group_ppl and date_ppl to np.int8 type.

cast_cols = ppl_df.columns.difference(['group_ppl', 'date_ppl'])

ppl_df[cast_cols] = ppl_df[cast_cols].apply(lambda x: x.astype(np.int8))

# Convert group_ppl to np.int16 type

ppl_df['group_ppl'] = ppl_df['group_ppl'].astype(np.uint16)

return ppl_df

people_df = preprocess_ppl(people_df)

print('First five rows and eight columns of preprocessed people...')

people_df[people_df.columns[:8]].head()

Notice how all the prefixes are gone and the types are now integers. All 'NaN's are replaced with 0s.

Exploratory Data Analysis¶

Now that the data has been converted to an analysable form we can begin exploring it and understand it better. We will start by looking at the summary statistics of columns. We will use Pandas describe() function for this.

print('Statistical Summary of first six columns of preprocessed people data...')

people_df[people_df.columns[:7]].describe()

As you can see, the describe() function returns the number of items (count), boundary values (min, max) and some basic statistical values (mean, standard deviation and quantiles).

From this we get an idea of how the values are distributed in each column. For example, from char_1 summary statistics we can tell the following.

- looking at the min, max and the quantiles it appears to be a two valued column (1 & 2)

- looking at mean, std and quantiles there seem to be many more 2s that 1s

Boxplots are also a good way of understanding distributions. Let's have a look at the boxplots of numeric people characteristics.

fig, axes = plt.subplots(1, 2)

fig.set_size_inches(15, 5)

ppl_char_1_10 = ['char_' + str(i) + '_ppl' for i in range(1, 10)]

people_df[ppl_char_1_10].boxplot(return_type='dict', ax = axes[0]);

axes[0].set_title('Distribution of numerical People characteristics (char_1_ppl to char_9_ppl)');

people_df[['char_38_ppl']].boxplot(return_type='dict', ax = axes[1]);

axes[1].set_title('Distribution of numerical People characteristics (char_38_ppl)');

char_3, char_7, char_9 and char_38 seem to be somewhat evenly distributed compared to the others, although the medians (red lines) may not exactly align with the center value. char_38 has a bigger range compared to others.

Histograms are also commonly used to look at the distribution of individual features. Let's look at the distribution of 'group_1' using a histogram.

fig, axes = plt.subplots(1, 2)

fig.set_size_inches(15, 5)

people_df.group_ppl.hist(ax=axes[0], bins=100)

axes[0].set(xlabel = 'value', ylabel = 'count', title = 'group_ppl distribution');

people_df[people_df.group_ppl != 17304].group_ppl.hist(ax=axes[1], bins=100)

axes[1].set(xlabel = 'value', ylabel = 'count', title = 'group_ppl distribution (sans value=17304)');

Both the histograms show the distribution of values in group_1. The difference between the two graphs is that the first one includes all the values and has 10 bins, while the second one excludes one value (=17304) and has 100 bins.

The value 17304 has a huge count compared to other values - it is 50 times the value of the second most frequent value. See below.

print('Group Value Count')

people_df[people_df.group_ppl != 17304].group_ppl.value_counts().head()

There are 77314 people records that have the value 17304 in the group_1 column. The next most frequently used value, 667 appears in 1538 records, which is about 50 times less than the 17304 count. This kind of skewness in data makes visualising the distribution difficult - huge vertical bars suppress the smaller ones.

By excluding the odd value (17304) we can see a much nicer distribution of the values that were suppressed in the first graph. The distribution looks kind-of explonential, with values less than 1500 and between 16500 & 17000 occuring more frequently than others.

Let's have a look at activity characteristics.

act_chars_1_9 = ['char_' + str(i) + '_act' for i in range(1, 10)]

fig, axes = plt.subplots(1, 2)

fig.set_size_inches(12, 5)

act_train_df[act_chars_1_9].boxplot(return_type='dict', ax = axes[0]);

axes[0].set(title = 'Distribution of Activity Characteristics (char_1_act to char_19_act)',

xlabel = 'Columns', ylabel = 'Values');

act_train_df[['char_10_act']].boxplot(return_type='dict', ax = axes[1]);

axes[1].set(title = 'Distribution of Activity Characteristic 10 (char_10_act)',

xlabel = 'Columns', ylabel = 'Values');

The box plots look really strange. The first plot includes char_1 to char_9 plots and the second one is for char_10. The reason for separating out char_10 is the huge range of char_10 values.

See the following table for the unique value count of each characteristic column. char_10 range is about 500 times bigger than others.

act_chars = act_chars_1_9 + ['char_10_act']

unique_val_cnts = {}

for col in act_chars:

unique_val_cnts[col] = act_train_df[col].nunique()

unique_val_cnts_df = pd.DataFrame(unique_val_cnts, index=['# of unique values'])

print(unique_val_cnts_df)

To understand the strangeness of the boxplots let's take a second look at thier summary statistics.

print(act_train_df[act_chars].describe())

The first three quantiles that form the box boundaries in the boxplot are zeros in char_1 to char_9. Hence, we don't see the boxes. The max values represented by the horizontal marks that end the dashed lines in the boxplot vary from 5 (char_6 max value) to 52 (char_1 max value).

char_10 has very small values (1 & 2) for the first two quantiles, but has a bigger number for the third quantile. Hence, we only see upper part of the box. The lower part of the box is suppressed by the large value range.

Let's understand why the distribution of characteristics are so skewed. We might get some hint by looking at how they are spread across categories.

print(act_train_df.groupby('activity_category')[act_chars].apply(lambda x: x.apply(lambda y: np.sum(y != 0))))

What we are seeing in the table is the number of non-zero values of each charateristic in the seven activity categories. Note that a zero value in a characteristic cell indicates a missing/no data in the original dataset.

Following is what we can infer from this table.

- char_1 to char_9 have data only in category 1

- char_10 has no data in category 1 but has data in all other categories

So, char_1 to char_9 and char_10 are mutually exclusive. Let's now have a look at the distributions of char_1 to char_9 within category 1.

fig, axes = plt.subplots(3, 3)

fig.set_size_inches(15, 12)

for i in range(3):

for j in range(3):

axis = axes[i, j]

char_name = act_chars_1_9[i*3 + j]

act_train_df[act_train_df.activity_category == 1][char_name].hist(bins = 100, ax = axis)

axis.set(title = char_name, xlabel='Values', ylabel='Count')

plt.tight_layout()

What is very obvious from these plots is the variation in the range of values across the nine characteristics: char_4 to char_7 have fewer than 10 unique values, char_3, char_8 and char_9 have between 10 and 20 unique values, and char_1 and char_2 have a bigger range of unique values (greater than 30).

Another thing stands out in all the plots is that there one or a couple of values that occur much more frequently than the rest. For example, values 2 and 5 in char_1 and char 2, value 3 in char_4 have a much higher count than other values.

Let's now have a look at the distribution of char_10 in categories 2 to 7.

fig, axes = plt.subplots(2, 3)

fig.set_size_inches(15, 8)

for cat_num in range(2, 8):

axis = axes[(cat_num - 2) / 3, (cat_num - 2) % 3]

act_train_df[act_train_df.activity_category == cat_num]['char_10_act'].hist(bins = 100, log = True, ax = axis)

axis.set(title = 'activity_category = ' + str(cat_num), xlabel='Values', ylabel='Count')

plt.tight_layout()

Observations

- The char_10 column in all records of activity categories 2, 6 and 7 have values 1, 110 and 194, respectively. In other words, char_10 has a single unique value in these categories.

- The range of values in the other categories is pretty large - close to 10,000.

Many more things can be explored in this dataset. We haven't explored the relationship between People and Activity tables. We could, for example, check the distribution of People across activity categories, find the average number of activities that a person performs etc.

Since this post is getting too long I will stop here and continue developing this case study over the remaining two stages of the Data Science Process - Machine Learning and Reporting, in a follow-up post. Let's save the preprocessed data for consumption in the next post.

act_train_df.to_csv('../processed_data/activity_table.csv')

people_df.to_csv('../processed_data/people_table.csv')

Comments

comments powered by Disqus